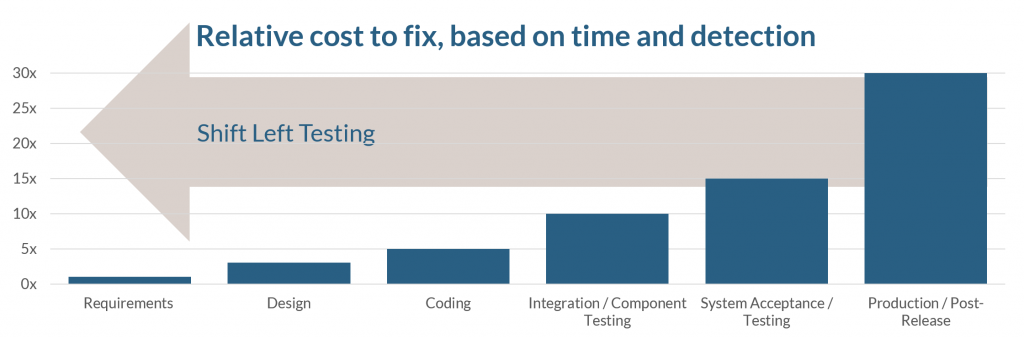

The cost of fixing software is well understood. Much research has demonstrated that fixing defects at the time they are introduced is significantly cheaper than fixing them later in the process, such as during system tests or, even worse, post-production (think recalls). The number of recalls linked to electronic failures has risen by 30% a year since 2012, compared with an average of 5% a year between 2007 and 2012, according to data from consultancy AlixPartners. The research firm J.D. Power, through its Safety IQ application, found that 189 distinct software recalls were issued over five years, covering more than 13 million vehicles. These weren’t merely interface-related issues either; 141 of them presented a higher risk of crashing.

Couple the growing number of post-production defects with our understanding that the cost of finding and fixing a defect grows significantly the later in the software development lifecycle it is identified, and the case for finding defects earlier becomes clear.

This raises two questions:

- How can we Shift Left our Quality and Security Assurance (QSA) activities in the Software Development Lifecycle (SDLC)?

- How can we enable developers to apply QSA gateways before sharing the code with the rest of the team? For example, if developer A introduces technical debt and shares these changes with developer B, the technical debt has now been indirectly inherited by developer B.

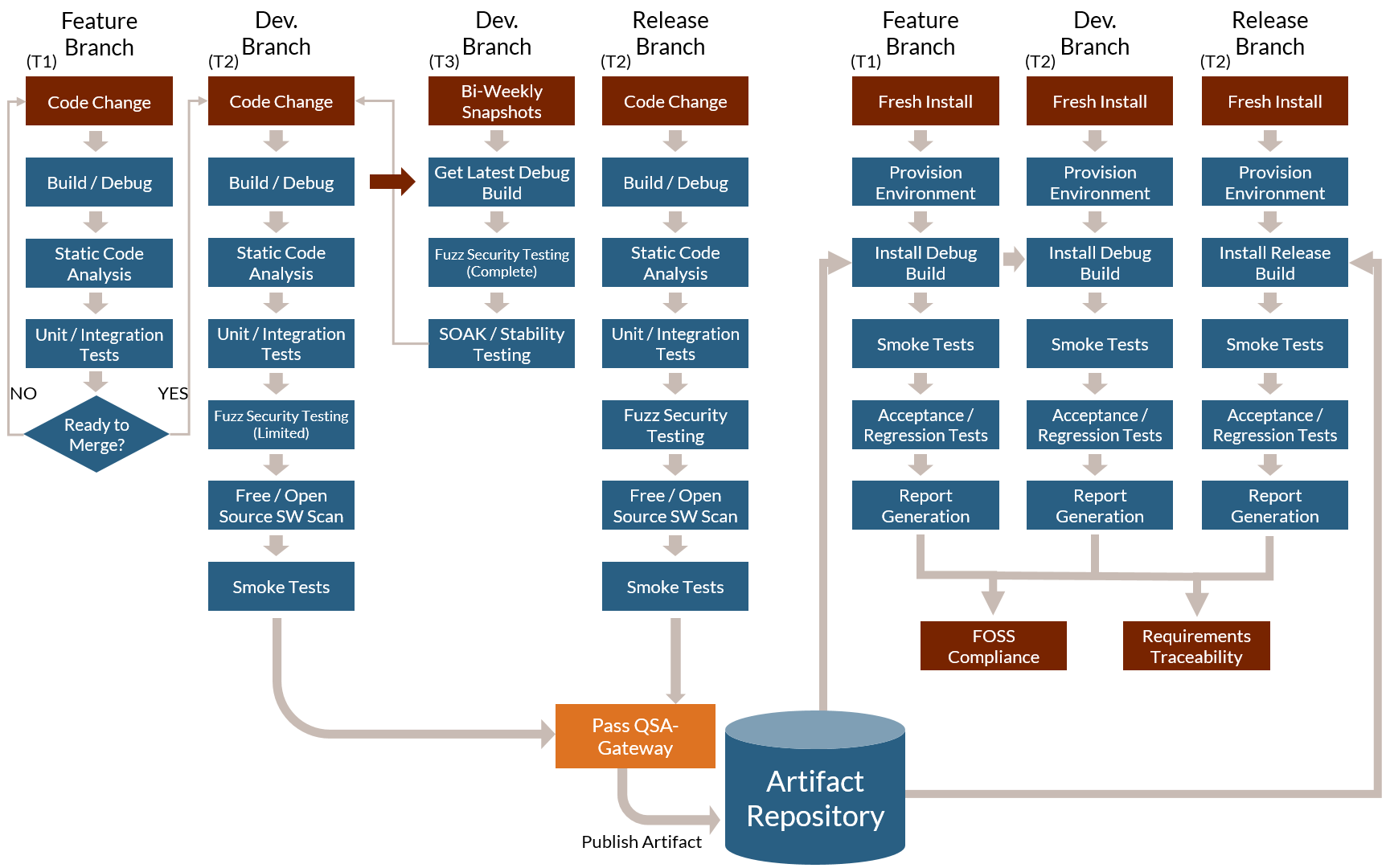

In our article ‘The safe & secure software factory’ we discussed the concept of QSA gateways. We can build on an existing concept known as TimeZero (T0), which describes running parts of the QSA process at the developers’ desktops (at time zero, when the code is being developed). We can extend this definition and apply it not just to developers but also to the continuous integration process. The result, T(x), is the ability to shift left all possible quality and security activities optimally.

This does not, however, answer question 2:

How can we isolate, verify and then merge developer changes?

The notion of ‘isolate, verify, merge’ addresses the following problem: when a developer introduces technical debt (e.g. a failing build, new static analysis issues, failing unit test cases, or an unapproved open source component) and merges it into a shared development branch, all members working on that branch inherit this technical debt. Others in the team will likely do the same and accumulate more technical debt. We are then in a position where we are continuously working to repay technical debt, and ownership of the debt is unclear. What if we could ensure that a developer, or a group of developers working on a feature together, pay their technical debt before sharing their code with the rest of the team? If we enable developers to isolate their changes, and if we automate the verification process with QSA gateways at different stages, then we can settle our technical debt before merging the changes in, allowing the team to focus on features rather than on repaying debt.
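As a rough illustration of the ‘verify’ step, the sketch below models a gateway that blocks a merge while any of the debt categories listed above is outstanding. The names `BranchReport`, `gate_violations` and `may_merge` are hypothetical and do not come from any particular CI product; in practice the report would be filled in from your build, test and analysis tools.

```python
from dataclasses import dataclass, field


@dataclass
class BranchReport:
    """Hypothetical summary of the CI results for one isolated feature branch."""
    build_ok: bool
    failed_unit_tests: int = 0
    new_static_analysis_issues: int = 0
    unapproved_components: list = field(default_factory=list)


def gate_violations(report: BranchReport) -> list:
    """Return the outstanding technical debt that blocks a merge (empty if none)."""
    violations = []
    if not report.build_ok:
        violations.append("failing build")
    if report.failed_unit_tests:
        violations.append(f"{report.failed_unit_tests} failing unit test(s)")
    if report.new_static_analysis_issues:
        violations.append(
            f"{report.new_static_analysis_issues} new static analysis issue(s)"
        )
    for component in report.unapproved_components:
        violations.append(f"unapproved open source component: {component}")
    return violations


def may_merge(report: BranchReport) -> bool:
    """A branch may be merged only once all of its debt is settled."""
    return not gate_violations(report)
```

Returning the list of violations, rather than a bare yes/no, keeps ownership of the debt visible: the developer who isolated the change sees exactly what must be repaid before merging.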

Example: Given tool x, the function T(x) produces a number (typically between 0 and 4) that tells your team exactly at which point in the Continuous Integration process the analysis for that tool will run. Suppose developer A creates a branch for their feature (isolating their change) and pushes this branch to the server repository (making it available to the team and thus to our Continuous Integration server). We can now leverage the full power of automation to verify developer A’s changes before they are allowed to be merged into shared branches (such as the develop branch, from which developer B will inherit code). This checkpoint in our Continuous Integration process follows T0, so we have called it T1.
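One way to picture T(x) is as a simple lookup from a tool to its checkpoint. The sketch below is illustrative only: the article names just T0 and T1, so the meanings given to T2 to T4 and the tool-to-stage assignments are assumptions, not a recommended configuration.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Checkpoints in the CI process. Only T0 and T1 are defined in the text;
    T2 to T4 are placeholders with assumed meanings."""
    T0 = 0  # developer desktop, while the code is being written
    T1 = 1  # feature branch on the CI server, before merging
    T2 = 2  # assumed: shared development branch
    T3 = 3  # assumed: system or integration test stage
    T4 = 4  # assumed: release candidate


# Hypothetical assignment of tools to the stage at which they run.
TOOL_STAGE = {
    "unit_tests": Stage.T0,
    "static_analysis": Stage.T1,
    "architecture_check": Stage.T1,
    "system_tests": Stage.T3,
}


def t(tool: str) -> Stage:
    """T(x): the checkpoint at which the analysis for tool x is run."""
    return TOOL_STAGE[tool]


def tools_at(stage: Stage) -> list:
    """All tools scheduled for a given checkpoint, in alphabetical order."""
    return sorted(name for name, s in TOOL_STAGE.items() if s == stage)
```

With this table, `t("static_analysis")` yields `Stage.T1`, and `tools_at(Stage.T1)` lists everything the CI server would run against a feature branch before it may be merged.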

The real advantage of T1 is that T0 no longer needs to be used as a gateway. Although this is a subjective view, stopping developers from checking in or committing their code is not a viable solution. If a developer is permitted to check in code with potential technical debt, then we can use T1 to verify it, share the results, and collaborate on them with the team. Furthermore, we typically run several types of analysis (such as architectural compliance checking and multiple static code analysis tools) that we may not want to burden the developer with running locally on their machine as part of T0.

What we are ultimately creating here is a continuous delivery (CD) strategy. CD can be achieved using a development pipeline. In general, a development pipeline breaks down the build of the software into several parts: Build Automation, Continuous Integration, Test Automation, and Deployment Automation.

The idea here is that the full development pipeline is run periodically (ideally on every commit), so that any issues can be identified quickly. The main challenge is that as the complexity of the system grows, the time taken to run a full development pipeline can grow to the point where batches of commits need to be validated together. This brings us back to the original issue: when something incorrect is identified, we have to work backwards to determine which commit caused the breakage, and then invest time in reverting it from the current release branch so that the rest of the commits can progress.
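To make the cost of batching concrete, the sketch below shows one way to work backwards through a batch of commits. It is the same idea as `git bisect`, written as a plain binary search. It assumes the commits are ordered oldest first and that once the pipeline fails it keeps failing for every later commit; `pipeline_passes` is a hypothetical callback that runs the full pipeline on a single commit.

```python
from typing import Callable, Optional, Sequence


def first_bad_commit(
    commits: Sequence[str],
    pipeline_passes: Callable[[str], bool],
) -> Optional[str]:
    """Return the earliest commit at which the pipeline fails, or None if the
    newest commit in the batch passes (or the batch is empty)."""
    if not commits or pipeline_passes(commits[-1]):
        return None
    lo, hi = 0, len(commits) - 1
    # Invariant: commits[hi] fails, and every commit before index lo passes.
    while lo < hi:
        mid = (lo + hi) // 2
        if pipeline_passes(commits[mid]):
            lo = mid + 1
        else:
            hi = mid
    return commits[lo]
```

Even with this shortcut, each probe is a full pipeline run, so a batch of n commits still costs roughly log2(n) additional runs before the offending commit can be reverted. Verifying each change in isolation avoids that search altogether.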

The QSA gateways can be used to identify software that is not fit for purpose at the various stages of the development pipeline, and to automatically progress or revert commits based on their assessment of quality. We are building a tier of pipelines, with gated progression to the next level, until the change is finally merged into the main release branch.
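The tiered, gated progression described above might be sketched as follows. The `Gateway` type and the wording of the decisions are assumptions made for illustration; a real pipeline would express the same ordering in its CI server configuration.

```python
from typing import Callable, List, NamedTuple, Optional


class Gateway(NamedTuple):
    """One tier of the pipeline: a name plus a check that accepts or rejects a commit."""
    name: str
    accepts: Callable[[str], bool]


def first_rejecting_gateway(commit: str, gateways: List[Gateway]) -> Optional[str]:
    """Run the gateways in order and stop at the first one that rejects the commit.
    Later, typically more expensive, tiers are not run for a rejected commit."""
    for gateway in gateways:
        if not gateway.accepts(commit):
            return gateway.name
    return None


def decide(commit: str, gateways: List[Gateway]) -> str:
    """Progress the commit if every tier accepts it, otherwise revert it."""
    rejected_by = first_rejecting_gateway(commit, gateways)
    if rejected_by is None:
        return "merge into release branch"
    return f"revert (rejected at {rejected_by})"
```

Ordering the gateways from cheapest to most expensive means that most unfit commits are stopped early, which keeps the slow tiers free for changes that have a realistic chance of being released.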

Let us look at how we bring these pieces together.

Creation of a complete CD pipeline workflow

Development Branch

By applying the concept of ‘Isolate, Verify & Merge’, we can create the basic building block for moving our organization towards Continuous Integration & Continuous Delivery (CI/CD).

Benefits of the ‘Isolate, Verify & Merge’ concept

- Faster time to market: Within a normal software delivery lifecycle, it is common for the integration and test/fix phases to consume weeks, if not months. When teams work to automate their build, deployment, validation and provisioning, developers can incorporate regression and integration tests into their daily work. As a result, fewer issues will be found and need fixing at the end of the project. Currently only 39% of embedded projects complete on time.

- Higher quality: With automation in place, it is much easier for developers to identify regressions in their software within minutes, freeing them from the complex fault-finding activities they would typically need to undertake. The time saved can be used to focus on user research, exploratory testing and usability testing, as well as performance and security testing.

- Reduced costs: The typical software project evolves over time. By automating the process for build, test and deployment, we can deliver incremental changes that are much cheaper to validate and release.

- Low-risk releases: One of the main goals of a CI/CD process is to make software deliveries painless, low-risk events that can be done on demand. This can be combined with blue-green deployments, where the application release model incrementally transfers user traffic from a prior version of an application to a nearly identical new release, both of which are running in production. Using this workflow, it is relatively straightforward to achieve zero-downtime deployments that are undetectable to users.

- Better products: Continuous delivery makes it feasible to work in small batches. By working this way, it is possible to get feedback from users throughout the delivery lifecycle based on working software. This can help organizations avoid developing features that deliver zero or negative value to the business. A common technique is A/B testing, which allows an organization to take a hypothesis-driven approach to product development where ideas can be tested with users before building out whole features.

- Happier teams: When organizations release more frequently, software delivery teams can engage more actively with users, learn which ideas work and which don’t, and see first-hand the outcomes of the work they have done. By automating or removing the low-value, painful activities associated with software delivery, organizations can focus on what they care about most – continuously delighting their users.
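The incremental traffic transfer mentioned under low-risk releases can be sketched as a loop that moves a share of users to the new release step by step and falls back to the prior version if a health check fails. This is a simplified model under stated assumptions: `healthy` is a hypothetical callback standing in for whatever monitoring you use, and the step size is arbitrary.

```python
from typing import Callable, List


def shift_traffic(step_percent: int, healthy: Callable[[int], bool]) -> List[int]:
    """Return the sequence of traffic shares (in percent) sent to the new release.

    After each increase, `healthy(share)` is consulted. If it reports a problem,
    all traffic is routed back to the prior version (share 0) and the rollout stops.
    """
    if step_percent <= 0:
        raise ValueError("step_percent must be positive")
    share = 0
    history = []
    while share < 100:
        share = min(100, share + step_percent)
        if not healthy(share):
            history.append(0)  # roll back: the prior version takes all traffic again
            return history
        history.append(share)
    return history
```

Because both versions stay running in production throughout, rolling back is only a routing change, which is what keeps each release a low-risk event.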

Further reading and downloads:

- Free whitepaper download: Building the software factory

- How SpaceX develops software

- Building the ultimate CI machine

- Convincing reasons to adopt code coverage tools

- Prevent “quality deficit” in your software

- The safe & secure software factory

- Illuminating system integration

- Technical debt in the world of Internet of Things / IoT