This interview answers the question “How can I reduce the testing effort for the software of my IoT system?” The expert from Vector explains how to identify issues in IoT software before they lead to costly incidents during production and gives practical advice on optimizing IoT test activities.

Daniel: I think we don’t need to talk about the fact that software – like any product – should be thoroughly tested before it is shipped to customers. But what’s special about testing software in the field of IoT?

Jochen: IoT systems combine software and hardware, so issues in the software sometimes also propagate to the hardware. However, the hardware is often not yet available at the beginning of a project. Apart from that, IoT systems can also be seen as “cyber-physical systems”, which means they interact with the physical world. The software that runs on such systems has to process physical data such as temperatures, velocities, accelerations, and angles. This data is usually measured continuously and has to be processed in real time. However, these characteristics of IoT systems should not stop us from testing software at an early stage – even without hardware.

Daniel: So what is your approach to testing IoT systems?

Jochen: Our approach is to first provide a plausible simulated system environment in which you can embed your SUT (Software/System Under Test) so that it behaves just as it will later in its real environment. A big advantage here is that you have full control over the simulation, e.g. in terms of timing, which lets you perform debugging or fault injection. Once the simulated environment is set up, you start implementing your test cases. Such tests can cover corner cases without risking damage to the hardware. Pure software – and the simulation is also software in the end – can easily be “reset” after a crash, which is usually not the case for real hardware devices.
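To make “full control over the simulation” concrete, here is a minimal Python sketch. It assumes a hypothetical temperature sensor (`SimulatedSensor`) and a hypothetical piece of SUT logic (`overheat_guard`) that shuts a device down when the temperature is too high or the sensor stops answering. Because the simulation owns a virtual clock, the test decides exactly when a fault is injected, which would be hard to do safely with a real device. None of these names come from a Vector tool.

```python
from typing import List, Optional


class SimulatedSensor:
    """Hypothetical temperature sensor driven by a virtual clock."""

    def __init__(self, temperature_c: float) -> None:
        self.temperature_c = temperature_c
        self.time_ms = 0                      # virtual time, set only by the test
        self.dropout_at_ms: Optional[int] = None

    def advance(self, dt_ms: int) -> None:
        """Move virtual time forward; no real waiting is involved."""
        self.time_ms += dt_ms

    def inject_dropout(self, at_ms: int) -> None:
        """Fault injection: the sensor stops answering from `at_ms` on."""
        self.dropout_at_ms = at_ms

    def read(self) -> Optional[float]:
        if self.dropout_at_ms is not None and self.time_ms >= self.dropout_at_ms:
            return None                       # injected fault: no reading
        return self.temperature_c


def overheat_guard(reading: Optional[float], limit_c: float = 80.0) -> bool:
    """Hypothetical SUT logic: True means 'shut the device down'."""
    return reading is None or reading > limit_c


def run_scenario(sensor: SimulatedSensor, steps: int, dt_ms: int) -> List[bool]:
    """Step the virtual clock and record the SUT decision after each step."""
    decisions = []
    for _ in range(steps):
        sensor.advance(dt_ms)
        decisions.append(overheat_guard(sensor.read()))
    return decisions


sensor = SimulatedSensor(temperature_c=25.0)
sensor.inject_dropout(at_ms=300)
print(run_scenario(sensor, steps=5, dt_ms=100))  # [False, False, True, True, True]
```

Resetting after a “crash” amounts to creating a fresh `SimulatedSensor`, and virtual time in integer milliseconds keeps every run bit-for-bit repeatable.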

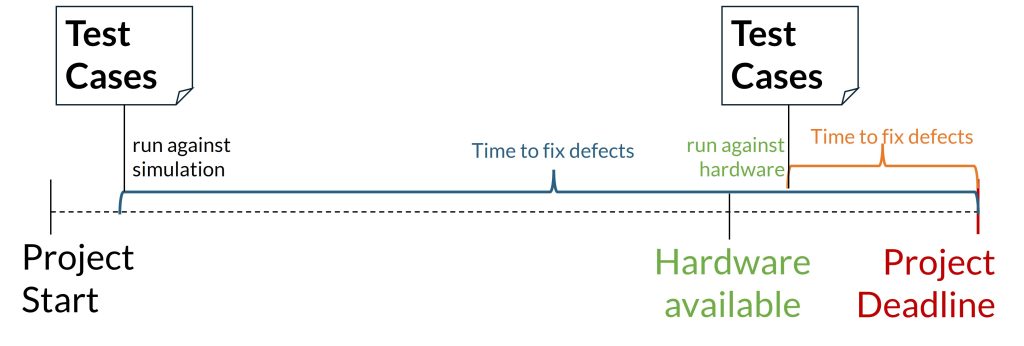

One big barrier to high-quality software that we often see in customer projects is that defects are usually detected very late in the development process. This is typically the case when teams wait until the hardware is ready before writing tests. At this late stage, errors are already very expensive to fix. They sometimes require redesigning individual hardware components. More importantly, though, they push back project deadlines, as there is usually not enough time to fix these issues properly at the end of a project.

Daniel: So, software testing is used to reduce costs. On the other hand, software testing itself is often seen as a high cost-driver in IoT projects. What are the best practices to minimize this effort and increase the payback from an investment in testing?

Jochen: Developers sometimes tend to create ad-hoc software tests instead of relying on proper tool support. However, only good tools let you leverage the full potential of your IoT tests by applying best practices that have already proven effective in other fields of software engineering. The first is to introduce and follow a test strategy right from the beginning of the project. The second is to integrate automated, reproducible tests into your CI/CD workflows so that you don’t have to think about them all the time. They just run automatically and often, which gives you confidence and lets you focus on what you really want to do: write good code.
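A small illustration of what “automated and reproducible” can mean in practice, as a Python sketch using only the standard library: the simulated measurement noise comes from a seeded generator, so every CI run sees identical data, and the tests are plain `unittest` cases that a pipeline can run with `python -m unittest`. The moving-average filter stands in for a hypothetical SUT; the seed, noise level, and tolerance are assumed values chosen for illustration.

```python
import random
import unittest
from typing import List


def noisy_readings(true_value: float, n: int, seed: int) -> List[float]:
    """Simulated sensor with Gaussian noise (sigma = 0.5).

    The generator is seeded, so the same seed always yields the same data:
    a failing CI run can be replayed exactly on a developer machine.
    """
    rng = random.Random(seed)
    return [true_value + rng.gauss(0.0, 0.5) for _ in range(n)]


def moving_average(values: List[float], window: int) -> List[float]:
    """Hypothetical SUT: simple moving-average filter."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


class MovingAverageTest(unittest.TestCase):
    def test_same_seed_gives_same_data(self) -> None:
        self.assertEqual(noisy_readings(20.0, 50, seed=42),
                         noisy_readings(20.0, 50, seed=42))

    def test_filter_tracks_true_value(self) -> None:
        smoothed = moving_average(noisy_readings(20.0, 200, seed=42), window=20)
        self.assertEqual(len(smoothed), 181)   # 200 - 20 + 1 full windows
        for value in smoothed:
            self.assertAlmostEqual(value, 20.0, delta=0.75)

    def test_rejects_invalid_window(self) -> None:
        with self.assertRaises(ValueError):
            moving_average([1.0, 2.0], window=0)
```

In a pipeline, a step that runs `python -m unittest` on this module executes the cases on every commit; the fixed seed is what makes a red build reproducible instead of flaky.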

Daniel: What are features that a “proper” IoT testing tool should offer in your opinion?

Jochen: First of all, throughout our IoT development project, we want as much automation as possible. As already mentioned, this can be achieved by integrating tests into a continuous integration and deployment pipeline. Yes, this is possible – even for IoT tests! Additionally, the tool should provide a flexible way to set up the simulation of the system environment mentioned above. This can be supported by allowing the integration of Simulink models or FMUs (Functional Mock-up Units), by standardized interfaces and APIs to connect to other tools, or by the possibility to program environment or application models in common programming languages such as Python or C#.
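As an example of programming an environment model in a common language, the following Python sketch couples a hypothetical room model (Newton's law of cooling plus a heater, integrated with fixed-step explicit Euler) to a hypothetical on/off thermostat acting as the SUT. All parameter values are assumptions; in a real project, such a model might instead be imported as an FMU or from Simulink.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RoomModel:
    """Hypothetical first-order thermal model of a heated room."""
    temperature_c: float = 15.0
    ambient_c: float = 10.0
    loss_per_s: float = 0.01        # assumed heat-loss coefficient k, 1/s
    heat_rate_c_per_s: float = 0.5  # assumed heating rate while heater is on

    def step(self, dt_s: float, heater_on: bool) -> float:
        """Advance by dt_s seconds with explicit Euler:
        dT/dt = -k * (T - T_ambient) + heat_rate * heater_on
        """
        d_temp = -self.loss_per_s * (self.temperature_c - self.ambient_c)
        if heater_on:
            d_temp += self.heat_rate_c_per_s
        self.temperature_c += d_temp * dt_s
        return self.temperature_c


def thermostat(temperature_c: float, heater_on: bool,
               setpoint_c: float = 21.0, hysteresis_c: float = 0.5) -> bool:
    """Hypothetical SUT: on/off controller with a hysteresis band."""
    if temperature_c < setpoint_c - hysteresis_c:
        return True
    if temperature_c > setpoint_c + hysteresis_c:
        return False
    return heater_on  # inside the band: keep the current state


def simulate(steps: int, dt_s: float = 1.0) -> List[float]:
    """Closed loop: the controller reads the model, the model reacts to it."""
    room = RoomModel()
    heater_on = False
    trace = []
    for _ in range(steps):
        heater_on = thermostat(room.temperature_c, heater_on)
        trace.append(room.step(dt_s, heater_on))
    return trace


trace = simulate(600)
print(f"min={min(trace[60:]):.2f} max={max(trace[60:]):.2f}")
```

A test against this loop can then assert physical properties, for example that after warm-up the temperature stays within a band around the setpoint, without any hardware in place.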

At some point, we obviously want to replace these simulations with actual physical hardware. In an ad-hoc test process, you typically start rewriting your tests from scratch at this step. Why? Because hardware uses different means of communication than a simulation. And here comes another cool feature of a proper testing tool: it should allow you to decouple the implementation of test code from the actual communication protocols used by the concrete hardware. This is why we at Vector have introduced a high-level application specification of communication that makes it easy to switch between different endpoints. By developing our tests against such an application layer, we can reuse test implementations written for the simulation and run the same test code against the actual hardware when it is ready.
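The decoupling described here can be sketched with an abstract interface in Python. The test is written once against a hypothetical application-layer interface (`RobotEndpoint`); one backend is a simulation, the other forwards the same calls to a device through a `transport` object that stands in for whatever protocol client (for example MQTT or DDS) the hardware requires. This illustrates the general pattern under those assumptions; it is not Vector's actual application-layer API.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, Tuple


class RobotEndpoint(ABC):
    """Hypothetical application-layer interface; tests only depend on this."""

    @abstractmethod
    def move_to(self, x_mm: float, y_mm: float) -> None: ...

    @abstractmethod
    def position(self) -> Tuple[float, float]: ...


class SimulatedRobot(RobotEndpoint):
    """Simulation backend: reaches the target instantly and exactly."""

    def __init__(self) -> None:
        self._pos = (0.0, 0.0)

    def move_to(self, x_mm: float, y_mm: float) -> None:
        self._pos = (x_mm, y_mm)

    def position(self) -> Tuple[float, float]:
        return self._pos


class HardwareRobot(RobotEndpoint):
    """Hardware backend: maps the same calls onto a device protocol.

    `transport` is a hypothetical client with send(command, payload) and
    request(command) methods; all protocol details stay inside it.
    """

    def __init__(self, transport: Any) -> None:
        self._transport = transport

    def move_to(self, x_mm: float, y_mm: float) -> None:
        self._transport.send("move_to", {"x_mm": x_mm, "y_mm": y_mm})

    def position(self) -> Tuple[float, float]:
        reply: Dict[str, float] = self._transport.request("position")
        return (reply["x_mm"], reply["y_mm"])


def check_move_reaches_target(robot: RobotEndpoint, tol_mm: float = 0.5) -> bool:
    """The test case: identical code for simulation and hardware."""
    robot.move_to(100.0, 50.0)
    x, y = robot.position()
    return abs(x - 100.0) <= tol_mm and abs(y - 50.0) <= tol_mm


print(check_move_reaches_target(SimulatedRobot()))  # True
```

Once the device is available, the same check runs as `check_move_reaches_target(HardwareRobot(client))`, where `client` is the (hypothetical) protocol adapter; the test body itself does not change.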

On top of these features, a proper test tool gives you a clear report on the results of the executed tests and links to a requirements management system, so that test specifications and test reports can be managed together.
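One way to picture the link between test reports and requirements is a traceability summary. The Python sketch below assumes that each test result carries the IDs of the requirements it verifies (the IDs and test names are made up) and derives a status per requirement, including requirements that no test covers yet. A real setup would exchange this data with the requirements management system rather than hard-code it.

```python
from collections import defaultdict
from typing import Any, Dict, List

# Hypothetical results: each test lists the requirement IDs it verifies.
RESULTS: List[Dict[str, Any]] = [
    {"test": "move_reaches_target", "requirements": ["REQ-101"], "passed": True},
    {"test": "overheat_shutdown", "requirements": ["REQ-205", "REQ-206"], "passed": False},
]
REQUIREMENTS: List[str] = ["REQ-101", "REQ-205", "REQ-206", "REQ-300"]


def requirement_status(results: List[Dict[str, Any]],
                       requirements: List[str]) -> Dict[str, str]:
    """Status per requirement: 'failed' if any linked test fails,
    'passed' if all linked tests pass, 'untested' if no test is linked."""
    outcomes: Dict[str, List[bool]] = defaultdict(list)
    for result in results:
        for req in result["requirements"]:
            outcomes[req].append(result["passed"])
    status = {}
    for req in requirements:
        linked = outcomes.get(req)
        if not linked:
            status[req] = "untested"
        elif all(linked):
            status[req] = "passed"
        else:
            status[req] = "failed"
    return status


print(requirement_status(RESULTS, REQUIREMENTS))
```

Reporting `REQ-300` as “untested” is the useful part: a report tied to requirements shows coverage gaps, not just failures.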

Daniel: Sounds like a lot of tedious and repetitive work that can be automated with the right tools. What role do you think standards play in properly testing IoT systems?

Jochen: Using standardized communication is a prerequisite for reusing test code. A common, standardized representation of data also makes it easier to understand communication endpoints and to develop software that uses them. Therefore, standards-based communication must be considered during system design – just like the testability of a traditional software architecture. By the way: as a tool provider, we are always interested in standards because we can integrate them into our tools and our customers can use them right away. Of course, we – or the customers themselves – can always build in something specific, but then we lose some flexibility and interoperability.

Daniel: What types of standards do you see as relevant for developing IoT systems?

Jochen: First of all, the protocols used by different communication partners have to be compatible. In the IoT domain, numerous standards have been established for such communication protocols, from MQTT and DDS to Bluetooth Low Energy, to name just a few.

The data that is sent via these standardized communication channels should also have a common structure and format. OPC UA is nowadays the de facto standard for representing device data in the IoT domain. For representing data in the cloud, digital twin languages such as the Asset Administration Shell (AAS) or the Digital Twin Definition Language (DTDL) are becoming increasingly prominent.

However, even if data is communicated via a standardized protocol in a standardized language, different names for the same properties in different systems still make it hard to interpret the data correctly. The Unified Namespace (UNS) offers an approach to common naming across the communication interfaces of an overall IoT system. Such naming, however, usually depends on the particular domain. This is why domain-specific standardization efforts are crucial these days – from the submodel templates of the Asset Administration Shell to the numerous standards published and maintained by organizations such as OMG or INCOSE. Of course, we still have some way to go to support all these concepts.
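To illustrate why common naming matters, the Python sketch below maps vendor-specific property names onto shared names and places them under one hierarchical topic path. The alias table and the enterprise/site/area/line/cell levels (an ISA-95-style hierarchy, which is one common convention for a unified namespace) are assumptions for illustration; in practice, the shared names would come from a domain standard such as an AAS submodel template.

```python
from typing import Dict

# Hypothetical aliases: three vendors, three names for the same property.
ALIASES: Dict[str, str] = {
    "temp": "temperature_c",
    "Temperature": "temperature_c",
    "tmp_degC": "temperature_c",
    "spd": "speed_rpm",
    "MotorSpeed": "speed_rpm",
}


def normalize(payload: Dict[str, float]) -> Dict[str, float]:
    """Rename vendor-specific keys to the shared names.

    Unknown keys raise an error, so naming gaps surface in tests
    instead of being silently misinterpreted downstream.
    """
    normalized = {}
    for key, value in payload.items():
        if key not in ALIASES:
            raise KeyError(f"no common name defined for {key!r}")
        normalized[ALIASES[key]] = value
    return normalized


def uns_topic(enterprise: str, site: str, area: str, line: str,
              cell: str, prop: str) -> str:
    """Build a hierarchical topic path from assumed ISA-95-style levels."""
    levels = [enterprise, site, area, line, cell, prop]
    if any(not level or "/" in level for level in levels):
        raise ValueError("levels must be non-empty and must not contain '/'")
    return "/".join(levels)


for prop, value in normalize({"Temperature": 21.5, "spd": 1200.0}).items():
    print(uns_topic("acme", "plant1", "assembly", "line2", "cell3", prop), value)
```

Failing loudly on an unknown name is a deliberate choice here: in a test environment, a missing mapping is exactly the kind of defect that should show up early.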

The test case implementation is decoupled from the concrete communication protocol. The application layer provides a generic interface, and all protocol specifics are handled implicitly by the testing tool. This works for different hardware (here, robots) that uses different means of communication, and also for running the same test case on both the simulation and the respective hardware once it is ready.

Daniel: Can you give some practical advice that our readers can use to get started efficiently testing IoT software?

Jochen: I believe that efficient software testing for IoT can be broken down into the following rules of thumb:

Test Early, Test Often. The earlier you identify defects in your code, the cheaper it is to fix them – particularly in IoT systems. Using simulations, you can already start testing during system design. The right tools even offer you debugging and fault injection possibilities at that stage.

Reuse Whenever Possible. When you start using standardized communication, proper IoT testing tools make it easy for you to switch between simulations and physical devices without rewriting any code.

Automate Wherever Possible. With the right tools, you can use best practices from traditional software engineering, such as continuous integration and deployment, for your IoT systems.

Further reading and information:

- IoT test beds: 4 typical setups

- Testing IoT systems: Challenges and solutions

- Testing in virtual environments

- Debugging software in virtual environments

- Product information: IoT Connectivity Hub

- Product information: Develop and Test Software of Distributed Systems with CANoe4SW