This post series explains which tests should be applied to an avionics app, in my example an app that simulates the Instrument Landing System (ILS). What is needed to satisfy both safety-critical and security requirements?

Read about the basics in my previous post, "Security and safety requirements to test an app connected to avionics".

In this blog post, you will learn about the verification methodology developed to satisfy the DO-178C and OWASP standards, and how it was executed on a dummy application to verify the software requirements and the architecture components.

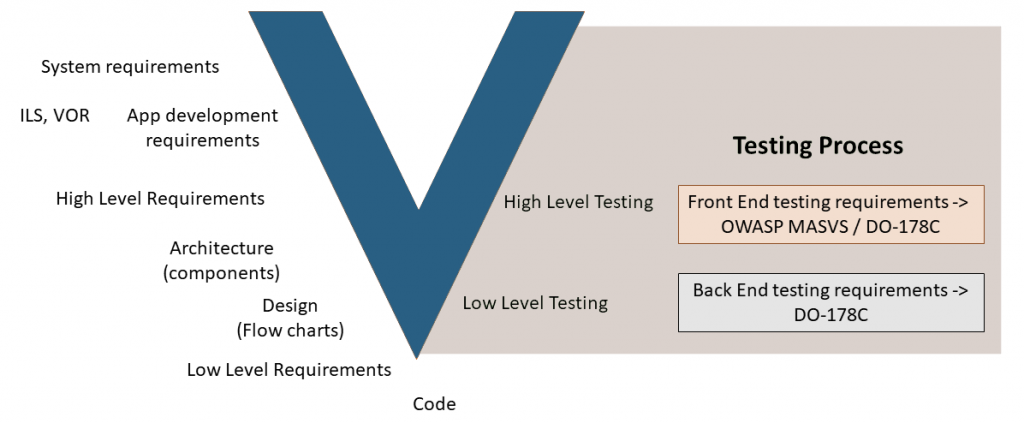

Include DO-178C and OWASP in the V-Model

The following figure shows the overall design process: it starts from the system-level requirements, goes down to design and code, and ends on the right side of the V-Model with the testing process.

In the following sections, you will find life cycle examples for each component of the V-Model.

The avionics app architecture

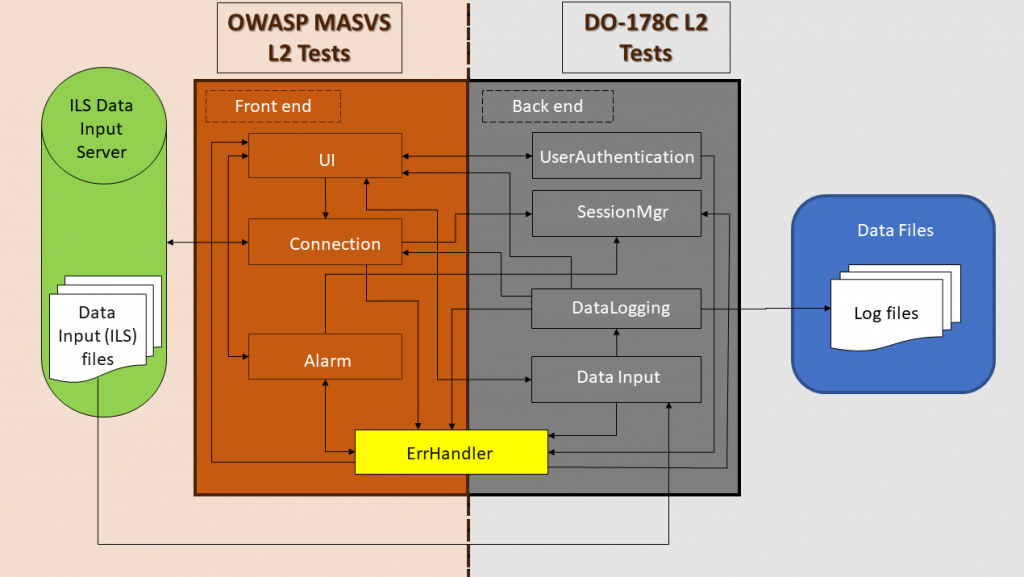

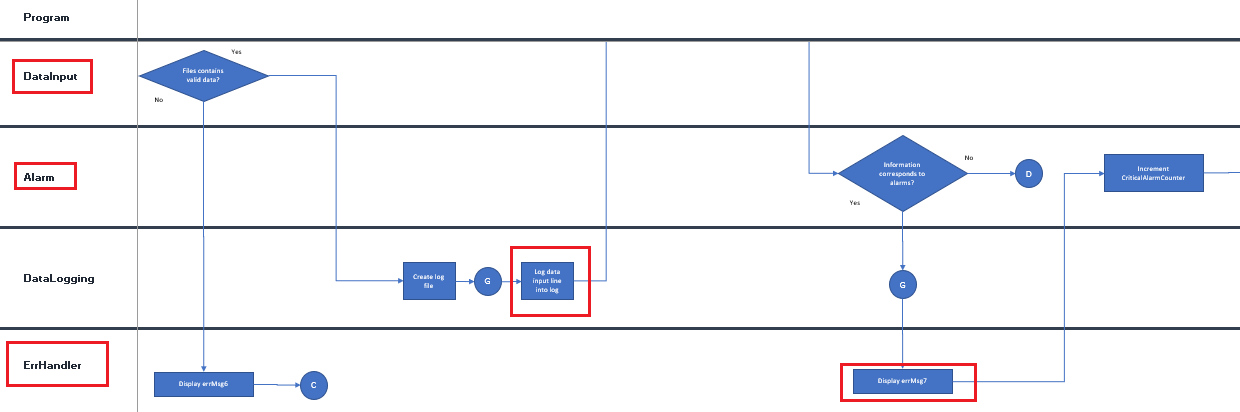

Before explaining the requirements decomposition, it is important to look at the application architecture, which contains front-end and back-end components:

The defined architecture includes eight components, divided into front-end modules, back-end modules, and the ErrHandler, which works across both ends.
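To make the front-end/back-end split concrete, here is a minimal Python sketch that records which side each component runs on. It only covers the three components named in this post (Alarm, DataInput and ErrHandler); the names `COMPONENTS` and `components_on` are hypothetical, and the other five components are left out because they are not described here.

```python
FRONT_END = "front end"
BACK_END = "back end"

# Only the three components named in this post; the other five are omitted.
COMPONENTS: dict[str, frozenset[str]] = {
    "Alarm": frozenset({FRONT_END}),
    "DataInput": frozenset({BACK_END}),
    "ErrHandler": frozenset({FRONT_END, BACK_END}),  # works across both ends
}


def components_on(side: str) -> list[str]:
    """Return, sorted by name, the components that run on the given side."""
    return sorted(name for name, sides in COMPONENTS.items() if side in sides)


print(components_on(FRONT_END))  # ['Alarm', 'ErrHandler']
print(components_on(BACK_END))   # ['DataInput', 'ErrHandler']
```

Modeling each component as a set of sides lets a cross-cutting component such as the ErrHandler appear in both views without duplicating it.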

Requirements decomposition

The requirements are linked to their corresponding components and are decomposed from High-Level Requirements (HLRs) into Low-Level Requirements (LLRs):

| Requirement | Component | Component description | Back end / Front end |
|---|---|---|---|
| HLR-Marker-4: MARKER shall set MB_Display to Outer when MB_Signal is equal to 1 | Alarm | Triggers the three types of alarms according to the data input. | Front end |
| LLR-BackEnd-7: DataInput shall validate the data consistency when a data file is selected on screen3 | DataInput | Offers the list of available data files and verifies the correctness of the data input. | Back end |
| LLR-Functional-18: ErrHandler shall display errMsg7 when (localizer is not centered or glide slope is not centered) and (marker beacon is an outer marker for 1 minute) and (user not depressing landed button) | ErrHandler | Displays errors and alerts on the screen. | Front and back end |
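The requirement logic in this table is precise enough to be written as code. The following Python sketch expresses HLR-Marker-4 and LLR-Functional-18 as pure functions. The function and parameter names are hypothetical, "for 1 minute" is assumed to mean at least 60 seconds, and the MB_Signal values for the middle and inner markers are not specified here, so they are left unmapped.

```python
from typing import Optional

OUTER_MARKER_SIGNAL = 1     # HLR-Marker-4: MB_Signal == 1 means Outer
ERR_MSG7_HOLD_SECONDS = 60  # "for 1 minute", read as >= 60 s (assumption)


def marker_display(mb_signal: int) -> Optional[str]:
    """HLR-Marker-4: set MB_Display to 'Outer' when MB_Signal equals 1.

    Middle/inner marker values are not specified in the post, so any
    other signal returns None in this sketch.
    """
    return "Outer" if mb_signal == OUTER_MARKER_SIGNAL else None


def show_err_msg7(localizer_centered: bool,
                  glide_slope_centered: bool,
                  outer_marker_seconds: float,
                  landed_pressed: bool) -> bool:
    """LLR-Functional-18: display errMsg7 when the aircraft is off the
    localizer or glide slope AND the outer marker has been active for
    1 minute AND the user is not pressing the landed button."""
    off_course = not localizer_centered or not glide_slope_centered
    outer_for_a_minute = outer_marker_seconds >= ERR_MSG7_HOLD_SECONDS
    return off_course and outer_for_a_minute and not landed_pressed


print(marker_display(1))  # Outer
print(show_err_msg7(localizer_centered=False, glide_slope_centered=True,
                    outer_marker_seconds=75, landed_pressed=False))  # True
```

Keeping each condition as a separate boolean input makes it straightforward to derive test cases in which every condition and every decision takes both true and false values, as decision condition coverage requires.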

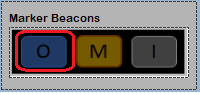

In this case, HLR-Marker-4 covers receiving information from the ILS [x] that indicates an outer marker beacon, meaning that the aircraft is 1.85 km from the runway.

This HLR is decomposed into the LLRs LLR-BackEnd-7 and LLR-Functional-18.

It is important to understand this requirements decomposition, because all verification activities trace back to it.

Wait! Did you create low-level requirements without a design?
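Because every verification activity traces back to this decomposition, it helps to keep the trace as data that can be checked. A minimal Python sketch, assuming a simple child-to-parent mapping; the names `LLR_PARENT`, `children_of`, `untraced_hlrs` and `orphan_llrs` are hypothetical:

```python
# Child-to-parent trace links, as described in this post.
LLR_PARENT: dict[str, str] = {
    "LLR-BackEnd-7": "HLR-Marker-4",
    "LLR-Functional-18": "HLR-Marker-4",
}


def children_of(hlr: str) -> list[str]:
    """LLRs that decompose the given HLR, sorted by ID."""
    return sorted(llr for llr, parent in LLR_PARENT.items() if parent == hlr)


def untraced_hlrs(hlrs: list[str]) -> list[str]:
    """HLRs with no decomposing LLR: a gap a reviewer should flag."""
    return [hlr for hlr in hlrs if not children_of(hlr)]


def orphan_llrs(hlrs: list[str]) -> list[str]:
    """LLRs whose parent is not in the HLR list: untraceable requirements."""
    return sorted(llr for llr, parent in LLR_PARENT.items() if parent not in hlrs)


print(children_of("HLR-Marker-4"))      # ['LLR-BackEnd-7', 'LLR-Functional-18']
print(untraced_hlrs(["HLR-Marker-4"]))  # []
print(orphan_llrs(["HLR-Marker-4"]))    # []
```

Both checks run in both directions (HLR to LLR and LLR to HLR), which is the kind of bidirectional traceability a requirements review looks for.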

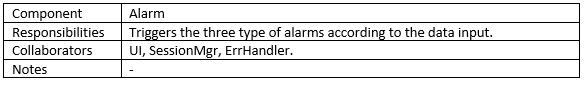

No! To fulfill DO-178C, the following low-level design was created. The table below lists its artifacts: the architecture, the component descriptions, how the components are interfaced, and the related low-level requirements.

| Design artifact | Content |
|---|---|
| The architecture | Shown in figure 1 |
| Component description |  |
| An interfaces diagram |  |
| LLRs | Such as the ones explained above (LLR-BackEnd-7, LLR-Functional-18) |

Ok, requirements understood.

And the tests for DO-178C and OWASP?

With the requirements defined, here are some examples of how they are traced to DO-178C and to OWASP MASVS.

| Requirement | Back end / Front end | Verification standard | Testing guide |
|---|---|---|---|
| HLR-Marker-4 | Front end | OWASP MASVS | MSTG-ARCH-1: All app components are identified and known to be needed. MSTG-ARCH-3: A high-level architecture for the mobile app and all connected remote services has been defined and security has been addressed in that architecture. |
| LLR-BackEnd-7 | Back end | DO-178C | Independent testing from development; Decision condition coverage; Review the design and code; High- and low-level requirements testing |

Let’s now explain the verification process performed to satisfy the items described in the Testing Guide column.

Peer reviews

Checklists were created to verify the artifacts and the process. The following table describes each checklist along with examples of the defects identified. This document review process satisfies one of the DO-178C verification objectives.

| Template | Description | Examples of issues reported |
|---|---|---|
| A – ILS SVP Review Report.xlsx | Review of the application Software Verification Plan (SVP) | Does the SVP provide clear expectations and responsibilities of the verification and validation organization? NO: It is recommended to include a table of technical design review participants as an appendix. |
| B – Software Requirements Review Report.xlsx | Review of the software requirements | Are the requirements atomic enough? NO: All the requirements under this section have a lot of text, much of which seems to be information that belongs in a comment. It is good practice to keep each requirement as atomic and simple as possible. |
| C – Integration Review Report.xlsx | Review of the software integration | Are all software components integrated? NO: Refer to PRs ILS-PR-0006 and ILS-PR-0008. |
| D – ILS Test Cases Review Report.xlsx | Review of the high-level and low-level test cases | Does the test case include test steps or scenarios? NO: Consider explaining additional configuration steps or referring to a generic configuration procedure. |
| E – ILS Test Procedures Review Report.xlsx | Review of the test procedures | Does the test procedure include detailed instructions for the set-up and execution? NO: Describe the details needed to set the GS (glide slope) signal and the equipment intended to carry the ILS application. |
| G – ILS Application Usability Review Report.xlsx | Review of the usability guidelines | Can the homepage be grasped in 5 seconds? N/A: No homepage is needed. |
| H – ILS PR Report – Aug9.xlsx | Problem reports (software defects) | ILS-PR-0004: Back-end tests cannot be executed because files are accessed locally and there is no access to the database. |
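The checklists lend themselves to a simple structure in which every NO answer becomes a reportable finding. A minimal Python sketch; the `ChecklistItem` fields and the YES/NO/N/A encoding are assumptions based on the examples in the table, not the layout of the actual spreadsheets:

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    question: str
    answer: str        # "YES", "NO" or "N/A", as in the review reports
    comment: str = ""


def findings(items: list[ChecklistItem]) -> list[str]:
    """Turn every NO answer into a finding; YES and N/A answers are not defects."""
    return [f"{item.question} NO: {item.comment}"
            for item in items if item.answer.strip().upper() == "NO"]


# Two answers taken from the table above (checklists B and G).
answers = [
    ChecklistItem("Are the requirements atomic enough?", "NO",
                  "All the requirements under this section have a lot of text."),
    ChecklistItem("Can the homepage be grasped in 5 seconds?", "N/A",
                  "No homepage is needed."),
]
for finding in findings(answers):
    print(finding)
```

Treating N/A as a distinct answer, rather than folding it into YES, keeps a record of which questions were judged not applicable and why.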

DO-178C and OWASP test procedures

To cover 100% of the high- and low-level requirements, test procedures were created to verify the HLRs and LLRs in the following areas:

- Glide Slope

- Localizer

- Marker Beacons

- Back End

- Front End

- Security

- Storage

- Usability

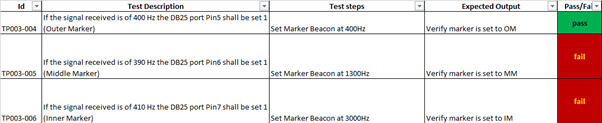

The following are examples of the test cases created to verify the requirements HLR-Marker-4 and LLR-BackEnd-7, described in Table 2.

ILS-TP003-Marker Beacons

The purpose of this test procedure is to verify the outer, middle and inner marker beacons.

The figure above shows the traceability of test cases 004, 005 and 006 to HLR-Marker-4, and that the tests failed. These failures were documented in ILS-PR-0002.
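As an illustration only, the outer-marker check of HLR-Marker-4 could be automated with Python's `unittest`. The `marker_display` function is a hypothetical stand-in for the unit under test, and the test method names do not correspond to the real ILS-TP003 test case IDs:

```python
import unittest
from typing import Optional


def marker_display(mb_signal: int) -> Optional[str]:
    """Hypothetical unit under test: HLR-Marker-4 maps MB_Signal == 1 to 'Outer'."""
    return "Outer" if mb_signal == 1 else None


class TestHlrMarker4(unittest.TestCase):
    """Test cases tracing to HLR-Marker-4."""

    def test_outer_marker_displayed_when_signal_is_1(self):
        self.assertEqual(marker_display(1), "Outer")

    def test_outer_marker_not_displayed_without_signal(self):
        self.assertNotEqual(marker_display(0), "Outer")
```

Saved as `test_marker.py`, the suite runs with `python -m unittest test_marker`. A failing assertion is the kind of result that would then be documented in a problem report such as ILS-PR-0002.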

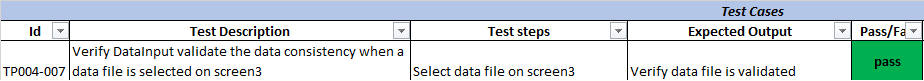

ILS-TP004-Back End

The purpose of this test procedure is to verify the server side of the application.

In this case, the test case validated the data consistency of a data file.
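The post does not specify the format of the data files, so the following Python sketch of an LLR-BackEnd-7 style consistency check assumes a hypothetical CSV layout (`time_s`, `localizer_dev`, `glide_slope_dev`, `mb_signal`) and hypothetical rules: the header must match, values must be numeric, time must increase, and `mb_signal` must be one of the assumed codes.

```python
import csv
import io

# Hypothetical data-file layout; the real ILS data format is not described.
EXPECTED_COLUMNS = ["time_s", "localizer_dev", "glide_slope_dev", "mb_signal"]
# Assumed MB_Signal codes: 0 = none, 1 = outer (per HLR-Marker-4), 2/3 = middle/inner.
VALID_MB_SIGNALS = {0, 1, 2, 3}


def validate_data_file(text: str) -> list[str]:
    """Check a data file for consistency; an empty list means it passed."""
    reader = csv.DictReader(io.StringIO(text))
    if reader.fieldnames != EXPECTED_COLUMNS:
        return [f"unexpected header: {reader.fieldnames}"]

    errors: list[str] = []
    previous_time = float("-inf")
    # Line 1 is the header; assumes one record per physical line.
    for line_no, row in enumerate(reader, start=2):
        try:
            time_s = float(row["time_s"])
            float(row["localizer_dev"])    # must be numeric
            float(row["glide_slope_dev"])  # must be numeric
            mb_signal = int(row["mb_signal"])
        except (TypeError, ValueError):    # missing (None) or non-numeric field
            errors.append(f"line {line_no}: missing or non-numeric value")
            continue
        if time_s <= previous_time:
            errors.append(f"line {line_no}: time does not increase")
        if mb_signal not in VALID_MB_SIGNALS:
            errors.append(f"line {line_no}: invalid mb_signal {mb_signal}")
        previous_time = time_s
    return errors


sample = ("time_s,localizer_dev,glide_slope_dev,mb_signal\n"
          "0,0.0,0.0,0\n1,0.1,-0.2,1\n1,0.1,-0.2,7\n")
print(validate_data_file(sample))
# ['line 4: time does not increase', 'line 4: invalid mb_signal 7']
```

Returning a list of errors, rather than stopping at the first one, lets a single test run report every inconsistency in the file.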

Are DO-178C and OWASP satisfied?

This is the most crucial aspect of this research. The following matrix maps each OWASP MASVS and DO-178C item to the evidence showing how it was fulfilled:

| Standard | Item | Evidence |
|---|---|---|
| OWASP MASVS | MSTG-ARCH-1 | C – Integration Review Report.xlsx |
| OWASP MASVS | MSTG-ARCH-3 | C – Integration Review Report.xlsx |
| DO-178C | Independent Testing from Development | ILS Test Procedures 001 |
| DO-178C | Decision Condition Coverage | ILS Test Procedures 001 |
| DO-178C | Review the Design and Code | B – Software Requirements Review Report.xlsx; D – ILS Test Cases Review Report.xlsx; E – ILS Test Procedures Review Report.xlsx; G – ILS Application Usability Review Report.xlsx |
| DO-178C | High and Low-Level Requirements Testing | ILS Test Procedures 001; H – ILS PR Report – Aug9.xlsx |
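The matrix can also be kept as data, so that a missing piece of evidence is caught automatically rather than by inspection. A minimal Python sketch; the names `MATRIX`, `uncovered` and `items_backed_by` are hypothetical. The reverse lookup shows which items lose their evidence if a document changes:

```python
# The compliance matrix as (standard, item) -> evidence documents.
Matrix = dict[tuple[str, str], list[str]]

MATRIX: Matrix = {
    ("OWASP MASVS", "MSTG-ARCH-1"): ["C – Integration Review Report.xlsx"],
    ("OWASP MASVS", "MSTG-ARCH-3"): ["C – Integration Review Report.xlsx"],
    ("DO-178C", "Independent Testing from Development"): ["ILS Test Procedures 001"],
    ("DO-178C", "Decision Condition Coverage"): ["ILS Test Procedures 001"],
    ("DO-178C", "Review the Design and Code"): [
        "B – Software Requirements Review Report.xlsx",
        "D – ILS Test Cases Review Report.xlsx",
        "E – ILS Test Procedures Review Report.xlsx",
        "G – ILS Application Usability Review Report.xlsx",
    ],
    ("DO-178C", "High and Low-Level Requirements Testing"): [
        "ILS Test Procedures 001",
        "H – ILS PR Report – Aug9.xlsx",
    ],
}


def uncovered(matrix: Matrix) -> list[tuple[str, str]]:
    """(standard, item) pairs with no evidence document: compliance gaps."""
    return [key for key, evidence in matrix.items() if not evidence]


def items_backed_by(matrix: Matrix, document: str) -> list[str]:
    """Items whose evidence includes the given document (impact analysis)."""
    return sorted(item for (_, item), evidence in matrix.items()
                  if document in evidence)


print(uncovered(MATRIX))  # []
print(items_backed_by(MATRIX, "C – Integration Review Report.xlsx"))
# ['MSTG-ARCH-1', 'MSTG-ARCH-3']
```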

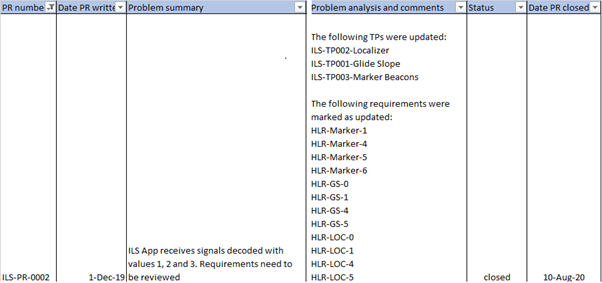

Software defects: DO-178C requires a problem report

Never, never forget to document the defects.

DO-178C requires a Problem Report (PR) to be created for every defect found during formal tests (production software), including:

1. PR number

2. Date PR written

3. Problem summary

4. ……

14. Problem analysis and comments

15. Status

16. Date PR closed

Yes, 16 items is a long list, but remember: we are verifying safety-critical software.

Below is a graphical example showing those six items from ILS-PR-0002 (from test cases TP003-005 and TP003-006, mentioned above):
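A problem report maps naturally onto a record type. The following Python sketch models only the six fields listed above; fields 4 to 13 are elided in this post and are therefore not modeled, and the `Open`/`Closed` status values and the `close` method are assumptions:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ProblemReport:
    """Only the six PR fields listed in this post; fields 4-13 are not modeled."""
    pr_number: str                      # 1. PR number, e.g. "ILS-PR-0002"
    date_written: date                  # 2. Date PR written
    summary: str                        # 3. Problem summary
    analysis: str = ""                  # 14. Problem analysis and comments
    status: str = "Open"                # 15. Status (values are an assumption)
    date_closed: Optional[date] = None  # 16. Date PR closed

    def close(self, on: date, analysis: str) -> None:
        """Close the PR, recording the analysis and the closing date."""
        if on < self.date_written:
            raise ValueError("a PR cannot be closed before it was written")
        self.analysis = analysis
        self.status = "Closed"
        self.date_closed = on
```

Closing a PR through a single method keeps the status and the closing date consistent with each other, so a report cannot be marked as closed without a date.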

Conclusions – Test passed for DO-178C and OWASP

This was a long journey: from state-of-the-art research on verification methods for applications connected to avionics, to understanding which safety-critical and security requirements need to be verified.

Finally, this article showed the requirements and architecture developed for an app simulating an Instrument Landing System, as well as the test suite executed to demonstrate that the software complies with DO-178C and with OWASP MASVS, resulting in a safe and secure airborne application.

Remark: This article is just a reference for developers and does not represent formal compliance with requirements. Formal Stage of Involvement (SOI) with a certification authority is required to certify any avionics-related module.

P.S: Hey Adriana, we made it! Thanks for your mentoring and support to develop this test methodology!!!

Further readings and references

- [1] Software Architecture Review and Assessment (SARA) Report

- [2] Back-end testing to storage requirements at Testlio – Mobile tests types and approaches

- [3] Localizer and Glide/Slope at Ground-Based Navigation – Instrument Landing System (ILS)

- [4] Marker Beacons at FAA Order 6750.24E

- [5] OWASP Mobile Testing Guide

- [6] RTCA DO-178C, Software Considerations in Airborne Systems and Equipment Certification

- Security and safety requirements to test an app connected to avionics – Second blog post of the series

- Why test an app that is connected to avionics? – First blog post of the series